PathFinder Project

For my senior design class, we spent one semester coming up with a product and another semester prototyping it. My team’s idea was a heads-up display (HUD) that could project directions and vehicle metrics onto a driver’s windshield. During the prototype phase, another group member and I were tasked with writing the code for our product (called the PathFinder). This article covers the software side of our project and goes into more depth on my own work.

Hardware

We chose the Raspberry Pi as the board for our project. We knew it would be easier to work with than a microcontroller, since I had previous experience with a Pi. Python was our go-to language for development because it let us prototype rapidly without getting stuck on low-level issues like memory management.

In addition to the Pi, we used several add-ons to gain more functionality:

PiJuice HAT for portable battery power on the road.

UCTRONICS OLED display for showing directions and data.

GPS module for keeping track of our current position.

Bluetooth OBD2 scanner for obtaining vehicle metrics.

Directions

The first part I tackled was obtaining directions. From my Place Picker project, I knew that Google had an API for getting directions from point A to point B. I decided to try this approach because it was the first thing that came to mind and it seemed fairly easy. To gather the directions, I just had to format an HTTP request to their API and parse the returned JSON to get a list of instructions. I created a directions.py module and wrote a method to do the following:

```python
import json

import requests
from bs4 import BeautifulSoup


def getDirections(self):
    """Makes an HTTP request to the Google Directions API endpoint and parses
    the JSON response to obtain a list of instructions.

    Returns:
        dict: The parsed JSON response.
    """
    url = 'https://maps.googleapis.com/maps/api/directions/json'  # endpoint for Google API

    # define parameters for the HTTP request
    params = dict(
        origin=self.user_origin,
        destination=self.user_destination,
        key=api_key,  # api_key is defined at module level (not shown)
    )

    resp = requests.get(url=url, params=params)  # response from Google is loaded into resp
    directions = json.loads(resp.text)  # parse Google's JSON response into a dict

    routes = directions['routes']

    # Parse JSON data looking for instructions and maneuvers
    for route in routes:
        for leg in route['legs']:
            for step in leg['steps']:
                # instructions from the JSON are in HTML format,
                # so BeautifulSoup is used to extract readable text
                ins_html = BeautifulSoup(step['html_instructions'], 'html.parser')
                instruction = ins_html.get_text()
                coordinate = step['start_location']

                self.coordinate_queue.append(coordinate)
                self.instruction_queue.append(instruction)

    return directions
```

Note that instead of hard-coding an origin and destination, I read them from the object’s user_origin and user_destination attributes. This was because my next step was to give end users an easy way to send their two locations to our device.
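Because the Directions response is nested several levels deep (routes, then legs, then steps), it can help to see the traversal run against a small hand-written response instead of a live API call. This is an illustrative sketch, not our project code: the sample data is made up, and it swaps BeautifulSoup for the standard library’s html.parser so it runs without extra packages. The names html_to_text, extract_steps, and SAMPLE_RESPONSE are hypothetical.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects the text content of an HTML fragment, dropping the tags."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)


def html_to_text(fragment):
    """Stdlib stand-in for BeautifulSoup(fragment, 'html.parser').get_text()."""
    parser = _TextExtractor()
    parser.feed(fragment)
    parser.close()  # flush any buffered text
    return "".join(parser.parts)


def extract_steps(directions):
    """Walks routes -> legs -> steps and returns (instruction, start_location) pairs."""
    steps = []
    for route in directions["routes"]:
        for leg in route["legs"]:
            for step in leg["steps"]:
                steps.append((html_to_text(step["html_instructions"]), step["start_location"]))
    return steps


# Hand-written sample with the same nesting as a Directions API response (made-up values)
SAMPLE_RESPONSE = {
    "routes": [{
        "legs": [{
            "steps": [
                {"html_instructions": "Head <b>north</b> on <b>Main St</b>",
                 "start_location": {"lat": 40.0, "lng": -83.0}},
                {"html_instructions": "Turn <b>left</b> onto <b>Oak Ave</b>",
                 "start_location": {"lat": 40.01, "lng": -83.0}},
            ]
        }]
    }]
}

if __name__ == "__main__":
    for instruction, start in extract_steps(SAMPLE_RESPONSE):
        print(instruction, start)
```

Each step’s start_location is what later gets compared against the GPS position to decide when to show the next instruction.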

MQTT Protocol

When pitching our idea earlier, one of the selling points for the product was that it would not require the user to download an additional app to pair their phone with the device. Looking back, that promise made it harder for us to find a way to give the device a user interface. Eventually, my research led me to MQTT as the solution. I chose it for several reasons:

MQTT has a reputation for being lightweight and reliable, two important attributes given that the product may be used in areas with poor network coverage.

The scalability of the protocol allowed us to claim that we could easily scale up production if we ever hypothetically “went to market”.

I could create a webpage acting as an MQTT publisher that people could visit to send their locations to the PathFinder (technically not an app).

After learning about MQTT, setting up a couple of things, and doing a lot of testing, I came up with a simple Flask application that publishes data to an MQTT broker.

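```python
# main.py
import os

import paho.mqtt.publish as publish
from dotenv import load_dotenv
from flask import Flask, render_template, request
from flask_mobility import Mobility
from flask_mobility.decorators import mobile_template

load_dotenv()
MQTT_SERVER = os.getenv("MQTT_SERVER")  # IP address of the Linode instance
print("Server: " + str(MQTT_SERVER))

app = Flask(__name__)
Mobility(app)  # detects mobile browsers so mobile_template can pick mobile/index.html


@app.route('/', methods=["POST", "GET"])
@mobile_template('{mobile/}index.html')
def index(template):
    if request.method == "POST":
        destination = request.form.get("dest")
        source = request.form.get("source")
        # publish each field to its own topic on the broker
        publish.single("destination", destination, hostname=MQTT_SERVER)
        publish.single("source", source, hostname=MQTT_SERVER)
        print("Published message to " + MQTT_SERVER)
    return render_template(template)


if __name__ == "__main__":
    from waitress import serve
    serve(app, host="0.0.0.0", port=80)
```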

```html
<!-- index.html -->
<!DOCTYPE html>
<html>
<head>
  <link rel="stylesheet" type="text/css" href="{{ url_for('static', filename='css/stylesheet.css') }}" />
</head>
<body>
  <h1 id="title">PathFinder</h1>
  <div>
    <form method="POST" id="input">
      <input type="text" placeholder="Enter Current Location" name="source" class="feedback-input" />
      <input type="text" placeholder="Enter Destination" name="dest" class="feedback-input" />
      <input type="submit" value="Submit" />
    </form>
  </div>
</body>
</html>
```

I dockerized this app and deployed it on a public cloud instance (Linode) alongside an MQTT broker container (Eclipse Mosquitto). After that, I created a HUD.py module and wrote some methods to subscribe to the broker. Below is a snippet from that file as an example:

```python
def on_source(self, client, userdata, msg):
    """Defines what should happen upon receiving a message published
    on the 'source' topic.

    Decodes the message payload and saves it to the instance attribute inputA.

    Args:
        client (paho.mqtt.client.Client): MQTT client instance.
        userdata: The private user data set on the client, passed to every callback.
        msg (paho.mqtt.client.MQTTMessage): Message received from the broker.
    """
    # msg.payload is bytes; decode it instead of slicing b'...' off str(payload)
    self.inputA = msg.payload.decode("utf-8")
    print("inputA = " + self.inputA)


def on_dest(self, client, userdata, msg):
    """Defines what should happen upon receiving a message published
    on the 'destination' topic.

    Decodes the message payload and saves it to the instance attribute inputB.

    Args:
        client (paho.mqtt.client.Client): MQTT client instance.
        userdata: The private user data set on the client, passed to every callback.
        msg (paho.mqtt.client.MQTTMessage): Message received from the broker.
    """
    # msg.payload is bytes; decode it instead of slicing b'...' off str(payload)
    self.inputB = msg.payload.decode("utf-8")
    print("inputB = " + self.inputB)
```
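paho-mqtt delivers msg.payload as bytes. A quick standalone comparison of stripping the b'...' wrapper from str(payload) versus decoding the bytes shows where slicing falls short: any non-ASCII character (an accented street name, say) comes through as a literal escape sequence. The two function names are illustrative only.

```python
def decode_by_slicing(payload: bytes) -> str:
    """Strips the leading b' and trailing ' from the repr-style string."""
    text = str(payload)
    return text[2:len(text) - 1]


def decode_payload(payload: bytes) -> str:
    """Decodes the raw MQTT payload bytes as UTF-8 text."""
    return payload.decode("utf-8")


if __name__ == "__main__":
    for address in ("123 Main St", "Café du Monde"):
        raw = address.encode("utf-8")  # what the broker actually delivers
        print(decode_by_slicing(raw), "|", decode_payload(raw))
```

Both agree for plain ASCII, but only decode() returns "Café" for the second address; the slicing version yields the text Caf\xc3\xa9 with literal backslashes.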

For our documentation, I created a diagram to better convey what is going on. The user goes to our webpage on their device and fills out the fields, and the PathFinder then obtains the information from the broker.

Here’s what our page looked like. It’s no longer online now that the class has ended, since I didn’t want to keep paying to host it in the cloud.
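Since the web page publishes the source and destination as two separate messages, the HUD has to wait until both have arrived before requesting directions. With paho’s loop_start(), the callbacks run on a background network thread, so one way to hand the values over to the rest of the program is a threading.Event. This is a sketch under that assumption, not how our HUD.py was actually structured; TripInputs is a hypothetical helper.

```python
import threading


class TripInputs:
    """Collects source and destination, which arrive on separate MQTT topics
    in no guaranteed order, and signals once both are present."""

    def __init__(self):
        self._lock = threading.Lock()
        self._values = {}
        self._ready = threading.Event()

    def set(self, topic, value):
        # Would be called from the MQTT callbacks (on the network thread)
        with self._lock:
            self._values[topic] = value
            if "source" in self._values and "destination" in self._values:
                self._ready.set()

    def wait(self, timeout=None):
        """Blocks until both values are present.

        Returns (source, destination), or None if the timeout expires first.
        """
        if not self._ready.wait(timeout):
            return None
        with self._lock:
            return self._values["source"], self._values["destination"]


if __name__ == "__main__":
    inputs = TripInputs()

    def deliver():
        # Simulate the broker delivering the two messages from another thread
        inputs.set("destination", "Airport")
        inputs.set("source", "Campus")

    sender = threading.Thread(target=deliver)
    sender.start()
    print(inputs.wait(timeout=5))
    sender.join()
```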

OBD

OBD functionality was mostly handled by my partner. I won’t go into a lot of detail here, but it did take some fiddling with the code just to get a connection to our Bluetooth reader. From my understanding, to get the data we wanted, we had to keep reconnecting to the scanner until it reported at least 100 supported commands. Apparently either the library or the chipset wouldn’t expose all of the commands available for querying data, and the number of available commands would change randomly from one connection to the next.

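```python
import obd

# (excerpt from the Obd class's setup code)
# Connect to the Bluetooth OBD2 scanner bound to /dev/rfcomm0
self.connection = obd.OBD(portstr="/dev/rfcomm0", protocol='6', baudrate=38400, fast=False)
retries = 0  # counts reconnection attempts

# Keep reconnecting until the scanner reports at least 100 supported commands
while len(self.connection.supported_commands) < 100:
    self.connection.close()  # release the serial port before reconnecting
    self.connection = obd.OBD(portstr="/dev/rfcomm0", protocol='6', baudrate=38400, fast=False)
    retries = retries + 1

if self.connection is not None:
    self.checkpoint = self.connection.status()
```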
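The loop above keeps reconnecting until the scanner reports at least 100 supported commands; the retries counter records how many attempts that took but never stops the loop, so a scanner that never reaches the threshold would hang startup. A bounded version, written generically so it can run without a scanner, might look like this. The function name and the limit of 20 attempts are arbitrary assumptions, not values we tested.

```python
def connect_with_retries(connect, is_good, max_attempts=20):
    """Calls connect() until is_good(connection) is True or max_attempts calls have been made.

    Returns (connection, attempts). If no attempt succeeds, the last connection is
    returned anyway so the caller can decide whether to run with partial support.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    connection = None
    for attempt in range(1, max_attempts + 1):
        connection = connect()
        if is_good(connection):
            return connection, attempt
    return connection, max_attempts


if __name__ == "__main__":
    # Stand-in for repeated obd.OBD(...) calls: each "connection" is just the number
    # of supported commands the scanner happened to report on that attempt.
    reported = iter([64, 87, 103])
    conn, attempts = connect_with_retries(lambda: next(reported), lambda n: n >= 100)
    print(conn, attempts)
```

On the Pi, connect would be something like lambda: obd.OBD(portstr="/dev/rfcomm0", protocol='6', baudrate=38400, fast=False) and is_good would be lambda c: len(c.supported_commands) >= 100. Closing each rejected connection with python-OBD’s close() before retrying also avoids holding the serial port open.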

Obtaining data from the scanner once connected was more straightforward. Here’s an example of how we obtained speed in mph:

```python
import obd


def get_speed(self):  # method name is illustrative; the original name isn't shown
    """Returns the current vehicle speed in whole mph, or 0 if it can't be read."""
    if self.connection.status() == obd.OBDStatus.CAR_CONNECTED:  # car is connected and turned on
        speed = self.connection.query(obd.commands.SPEED)  # response value is in km/h
        if speed.is_null():  # no data received, or it couldn't be parsed
            return 0
        self.speedInMiles = speed.value.to('mph')  # unit conversion handled by Pint
        return int(self.speedInMiles.magnitude)
    return 0
```
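python-OBD returns SPEED as a Pint quantity in km/h, and .to('mph') handles the conversion. For reference, the same arithmetic without Pint is a single division by 1.609344 (kilometres per international mile). Note that int() truncates rather than rounds; the rounded variant here is an alternative, not what we used.

```python
KM_PER_MILE = 1.609344  # exact: the international mile is defined as 1.609344 km


def kph_to_mph(kph):
    """Converts a speed in km/h to whole mph, truncating the way int() does."""
    return int(kph / KM_PER_MILE)


def kph_to_mph_rounded(kph):
    """Same conversion, but rounded to the nearest whole mph instead of truncated."""
    return round(kph / KM_PER_MILE)


if __name__ == "__main__":
    for kph in (0, 50, 101):
        print(kph, "km/h ->", kph_to_mph(kph), "mph truncated,", kph_to_mph_rounded(kph), "mph rounded")
```

At 101 km/h (about 62.76 mph), truncation shows 62 while rounding shows 63; on a dashboard readout, either is defensible as long as it’s consistent.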

Display

We displayed our directions and output on a small OLED display, with the plan of using magnifying lenses to project it onto the windshield. The screen was essentially divided into four lines that we could use to show data. Directions went on the top two, and the bottom two were reserved for a simple direction indicator and OBD2 data. We also had to make sure the screen was refreshed periodically (basically setting everything to black) to keep previous lines from bleeding into new ones when the directions changed.

Below is an example of one of our methods for displaying the directions:

```python
from PIL import ImageFont


def show_direction(self, text):
    """Takes an instruction and splits it into 2 lines for display.

    Args:
        text (string): String containing the instruction.
    """
    words = text.split()
    lines = []
    wpl = len(words) / 2  # words per line
    prev = 0  # start index of the current line

    # We want to split the words evenly across the two lines.
    # There are two cases: 1 - the number of words is divisible by 2
    #                      2 - the number of words is NOT divisible by 2
    # In the latter case, we append the remainder (%) of the words
    # onto the last line.
    if len(words) % 2 == 0:
        for i in range(0, 2):
            lines.append(words[int(prev):int(prev + wpl)])
            prev = prev + wpl  # advance to the next line's start
    else:
        lines.append(words[int(prev):int(prev + wpl)])
        prev = prev + wpl
        lines.append(words[int(prev):int(prev + wpl + (len(words) % 2))])

    self.clear_disp()  # clear the display before drawing the words

    testfont = ImageFont.truetype("/usr/share/fonts/truetype/msttcorefonts/Georgia.ttf", 7)  # font size 7
    top = 0  # y offset of the first line, in pixels

    for i in range(0, 2):
        # place each line 8 pixels below the previous one
        self.draw.text((0, top + i * 8), ' '.join(lines[i]), font=testfont, fill=255)

    # refresh the display for actual output
    self.refresh()
```

GPS

To keep track of our current position in a moving vehicle, we used a GPS module and a Python library. There are only a couple of things we did in the GPS.py module: we wrote a method to get a pair of latitude/longitude coordinates, and another method to calculate the distance between two coordinate pairs. Going back to the API we were using, each instruction also came with fields giving its start and end coordinates. By tracking our current distance to the next instruction’s starting location, we could ideally know when to switch to the next instruction on our display.

```python
import geopy.distance

# gpsd is a module-level gps session created elsewhere in GPS.py (not shown)


def get_coordinates(self):
    """Returns latitude and longitude of the current location.

    Returns:
        (float, float): Tuple of floating point coordinates.
    """
    gpsd.next()  # read the next report from gpsd so gpsd.fix is up to date
    return (gpsd.fix.latitude, gpsd.fix.longitude)


def calculate_distance(self, cords1, cords2):
    """Returns the distance in miles between two coordinate points.

    Args:
        cords1 (float, float): Tuple of point A.
        cords2 (float, float): Tuple of point B.

    Returns:
        float: Distance between point A and point B, rounded to 2 decimals.
    """
    distance = geopy.distance.geodesic(cords1, cords2).miles
    return round(distance, 2)
```
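geopy’s geodesic() measures distance on the WGS-84 ellipsoid. If pulling in geopy isn’t an option, the haversine formula on a spherical Earth is a common standard-library approximation, typically within about 0.5% of the ellipsoidal result. The sketch below swaps in haversine for that reason and adds the switching rule described earlier: advance to the next instruction once we come within some threshold of its start location. The function names and the 0.05-mile threshold are assumptions, not values from our code.

```python
import math

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius; haversine treats the Earth as a sphere


def haversine_miles(cords1, cords2):
    """Great-circle distance in miles between two (latitude, longitude) pairs in degrees."""
    lat1, lon1 = map(math.radians, cords1)
    lat2, lon2 = map(math.radians, cords2)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    a = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def as_pair(location):
    """Converts a Directions API location dict like {"lat": ..., "lng": ...} to a tuple."""
    return (location["lat"], location["lng"])


def should_advance(current, next_start, threshold_miles=0.05):
    """True once we are within threshold_miles of the next instruction's start.

    The 0.05-mile (264 ft) default is an illustrative assumption, not a tuned value.
    """
    return haversine_miles(current, next_start) <= threshold_miles


if __name__ == "__main__":
    print(round(haversine_miles((40.0, -83.0), (40.01, -83.0)), 2))
    print(should_advance((40.0, -83.0), as_pair({"lat": 40.0003, "lng": -83.0})))
```

A fixed threshold is exactly where the rerouting and curvy-road problems come in: the check only says we are near the next start point, not that we are on the expected road.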

Bringing it together

In our first prototype, a HUD.py module handled input from our MQTT broker and called the directions.py module to obtain instructions from the API. We were focused on making sure the directions and OBD2 features each worked, and as a result they ended up being developed independently of one another: only one of HUD.py and OBD.py could run on our Pi at a time. To fix that, we later created a main.py module that imported both HUD and OBD. We rewrote both modules to use Python’s threading library so that main could start them on separate threads at the same time.

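```python
# main.py
import display
import HUD
import OBD


def main():
    screen = display.Display()  # one OLED display shared by both modules
    obd = OBD.Obd(screen)
    hud = HUD.Hud(screen)
    hud.start()  # each module runs on its own thread
    obd.start()


if __name__ == "__main__":
    main()
```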
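main() calls start() on both objects, which suggests Hud and Obd subclass threading.Thread (an assumption; the rewritten modules aren’t shown here). Because both threads draw onto the same OLED, access to the display should be serialized so one thread’s update can’t interleave with the other’s. The sketch below uses a fake display guarded by a threading.Lock so it runs without hardware; FakeDisplay, write_row, and Worker are hypothetical names.

```python
import threading


class FakeDisplay:
    """Hypothetical stand-in for display.Display(): stores the text on each row."""

    def __init__(self):
        self.rows = {}
        self.lock = threading.Lock()  # one lock guards the whole shared screen

    def write_row(self, row, text):
        # On a real display, the clear/draw/refresh sequence would all go inside this lock
        with self.lock:
            self.rows[row] = text


class Worker(threading.Thread):
    """Shape assumed for Hud and Obd: a Thread subclass that owns one display row."""

    def __init__(self, screen, row, messages):
        super().__init__()
        self.screen = screen
        self.row = row
        self.messages = messages

    def run(self):
        # start() calls run() on a new thread
        for message in self.messages:
            self.screen.write_row(self.row, message)


def main():
    screen = FakeDisplay()
    hud = Worker(screen, row=0, messages=["Head north on Main St", "Turn left onto Oak Ave"])
    obd = Worker(screen, row=3, messages=["25 mph", "31 mph"])
    hud.start()
    obd.start()
    hud.join()  # the real device would keep running; joining here lets the demo finish
    obd.join()
    return screen.rows


if __name__ == "__main__":
    print(main())
```

Giving each thread its own rows keeps their output from overwriting each other, while the lock keeps their screen updates from overlapping in time.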

In main, we create our display and pass it to both OBD and HUD so that each can draw its respective information on the OLED display. Here’s how the supporting modules fall under the two main ones (HUD and OBD), along with an overview of what each does:

HUD

Uses the directions and GPS modules

At runtime, it subscribes to the MQTT broker for a source and destination. Once it has that information, it gathers a list of instructions from the API and loads it into memory.

After that, it displays the first set of instructions on the screen. Then it continuously polls the GPS sensor and updates the instructions as needed.

OBD

After connecting to the scanner, it continuously gathers speed and fuel-level data.

Information is then formatted into a more user-friendly string and sent to the display.
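The OBD thread’s last step, turning raw readings into a compact string for one row of the OLED, might look something like the sketch below. The layout and function name are hypothetical (the exact string we displayed isn’t shown here). python-OBD’s FUEL_LEVEL command reports fuel as a percentage, which is what fuel_percent stands in for.

```python
def format_obd_line(speed_mph, fuel_percent):
    """Builds a short status line for the OBD row of the display.

    The "45 mph  Fuel 62%" layout is hypothetical; None means the value
    couldn't be read from the scanner.
    """
    speed = f"{int(speed_mph)} mph" if speed_mph is not None else "-- mph"
    fuel = f"Fuel {round(fuel_percent)}%" if fuel_percent is not None else "Fuel --%"
    return f"{speed}  {fuel}"


if __name__ == "__main__":
    print(format_obd_line(45, 62.4))
    print(format_obd_line(None, 80))
```

Placeholders for missing values keep the line a stable width, which matters on a screen this small.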

Improvements

Our final product was far from polished; it only demonstrated the minimum functionality for our prototype. From my side of the project, these are a few improvements that could be made:

The MQTT logic doesn’t account for multiple devices with multiple users; some form of user authentication is needed. Additionally, the Pi has to be connected to the internet to reach the broker (we used a cellular hotspot to get around this while on the road).

Our method of deciding when to advance to the next direction, based on the distance to its starting point, is open to a lot of logical flaws (e.g., what if we need to reroute, or the road has an unpredictable shape?). A more sophisticated way of keeping track of instructions may be needed.

Overall latency is high, in terms of how long it takes to connect to the OBD2 scanner, get coordinates from GPS, etc. I believe part of this is due to Python being slower than other languages, but it’s also due to our use of cheaper, lower-performance hardware.

While I wasn’t part of designing the lenses/enclosure for the PathFinder and can’t speak to the technical details, I should note that we couldn’t achieve a very clear image on the windshield. If we were to work on improving that aspect of the device, I believe a brighter/larger display would go a long way toward improving the projection.

Documentation

During the development of the PathFinder, I came across a convenient way to document our code using Sphinx. At the time of writing, it’s been a few months since we finished the project, so I don’t remember the exact commands I used to build the docs. However, I was able to build a static site for our docs that can be viewed here.
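For anyone retracing this, a typical Sphinx setup for docstrings in the Google style our modules use ("Args:", "Returns:") is a conf.py along the lines of the sketch below. sphinx-apidoc -o docs . can generate the module stub pages, and sphinx-build -b html docs docs/_build renders the static site. The docs/ folder layout and project paths are assumptions, not our exact configuration.

```python
# docs/conf.py (assumes docs/ sits at the project root, next to the modules)
import os
import sys

sys.path.insert(0, os.path.abspath(".."))  # let autodoc import HUD, OBD, GPS, etc.

project = "PathFinder"

extensions = [
    "sphinx.ext.autodoc",   # pull documentation from docstrings
    "sphinx.ext.napoleon",  # parse Google-style "Args:" / "Returns:" sections
]
```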

All the code for our project can be found in this repo.

To keep things separate, I wrote the code for our MQTT broker and webpage here.

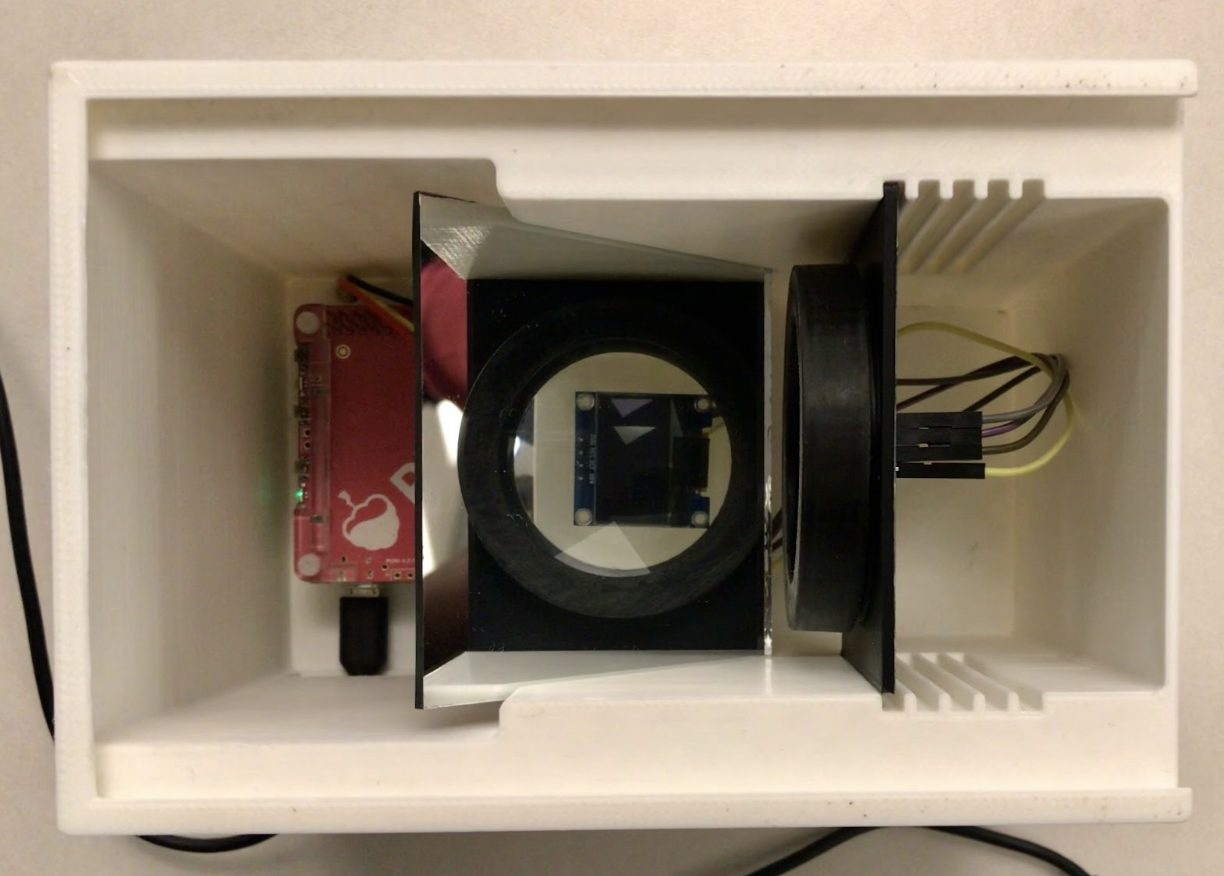

I’ll end by showing a couple of pictures/videos of what our finished prototype looked like. Hopefully they give a better idea of what our product was supposed to do than my explanation:

Internal view of final prototype

External view of final prototype